Visualizing the gradient descent method

By a mysterious writer

Last updated 28 March 2025

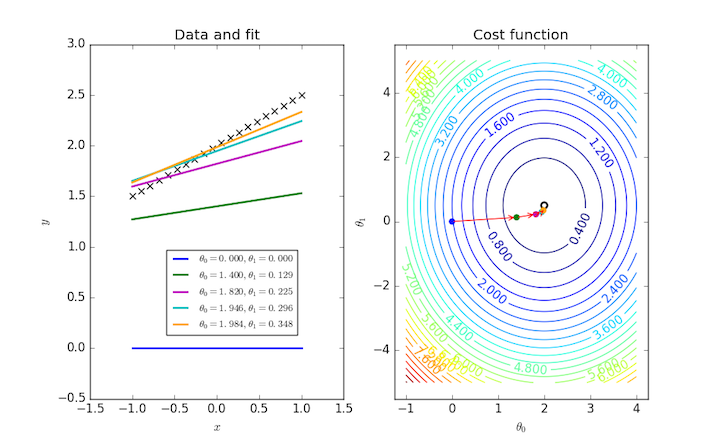

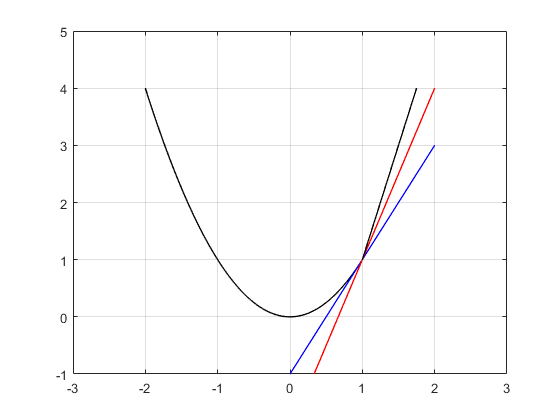

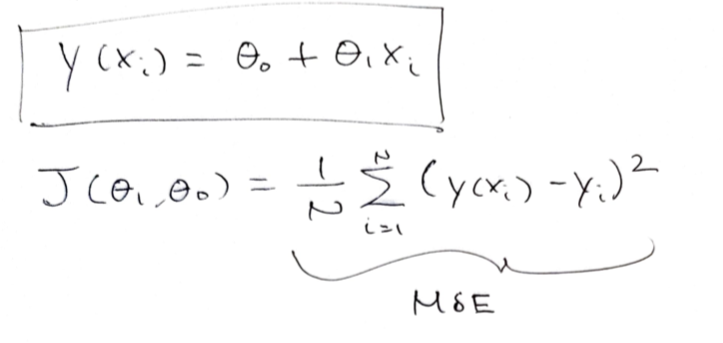

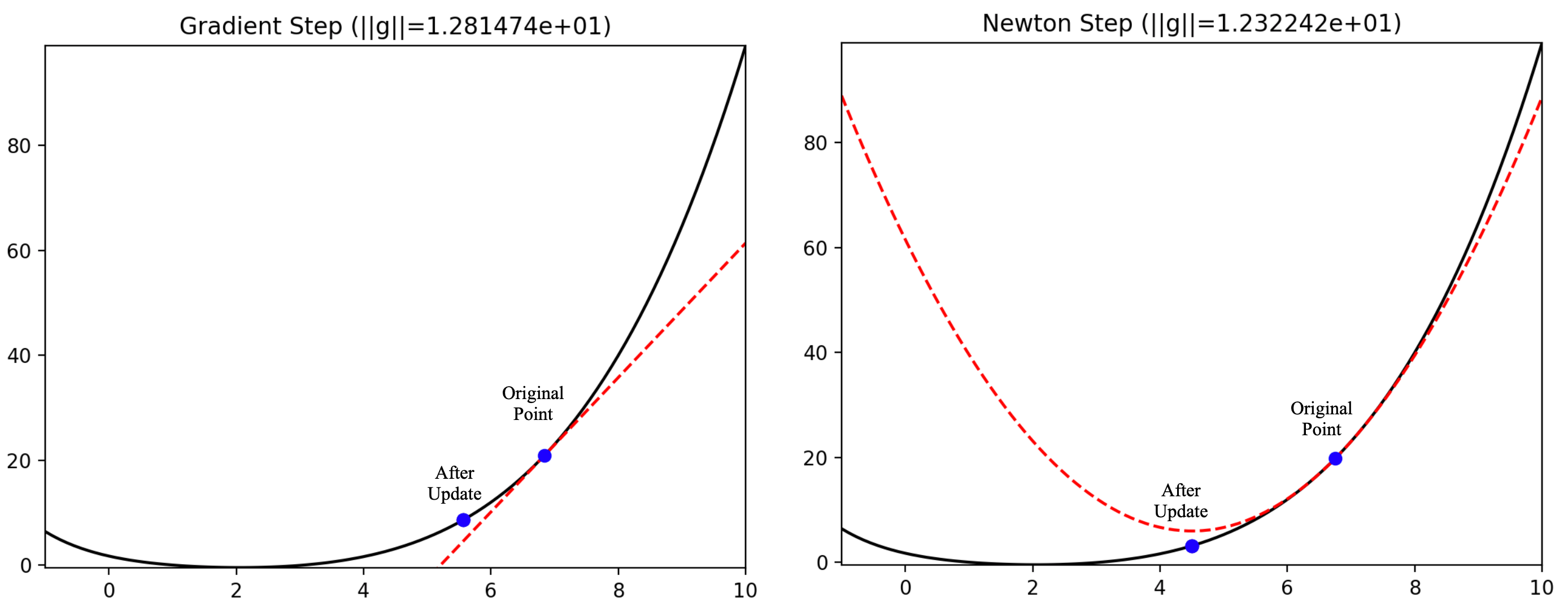

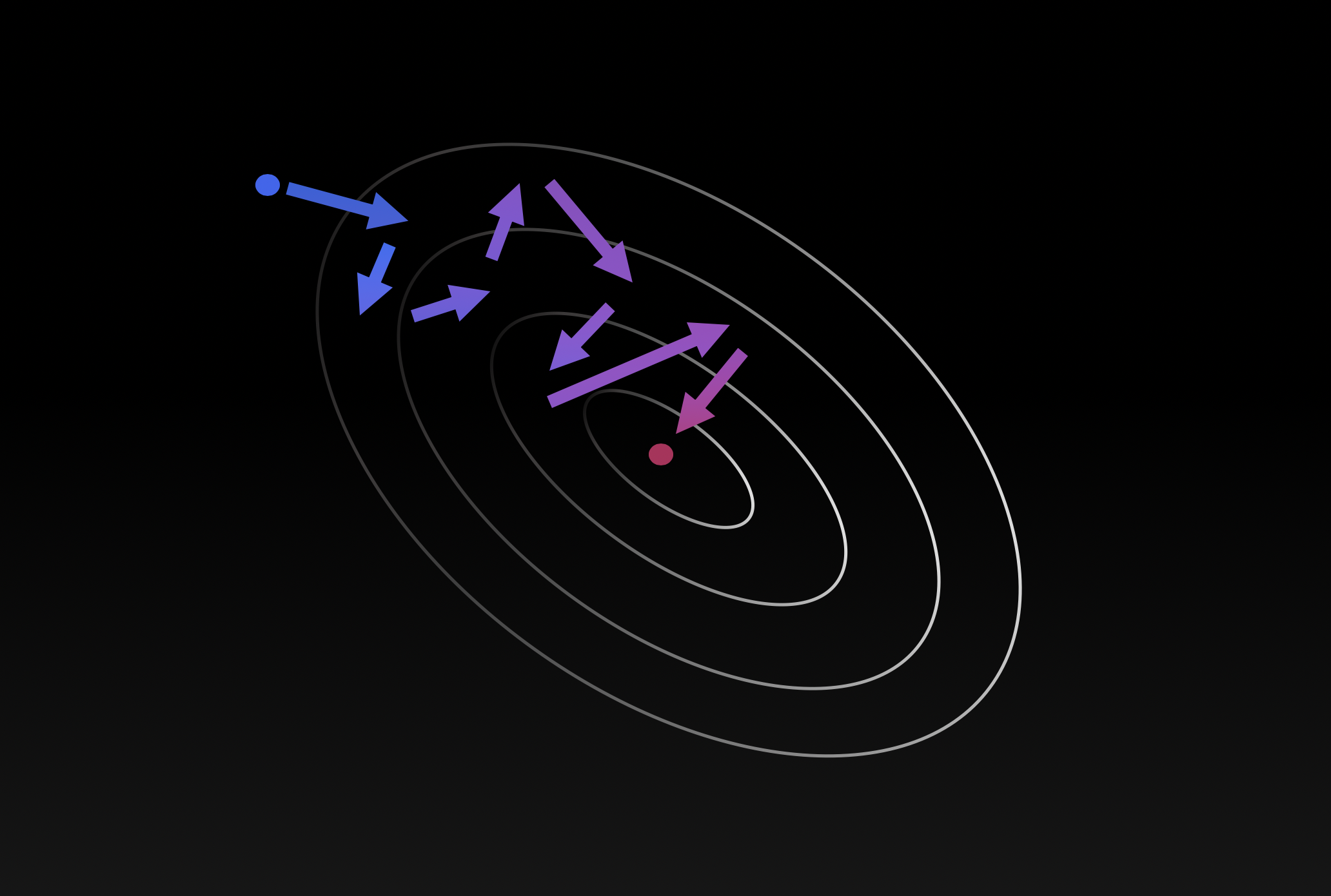

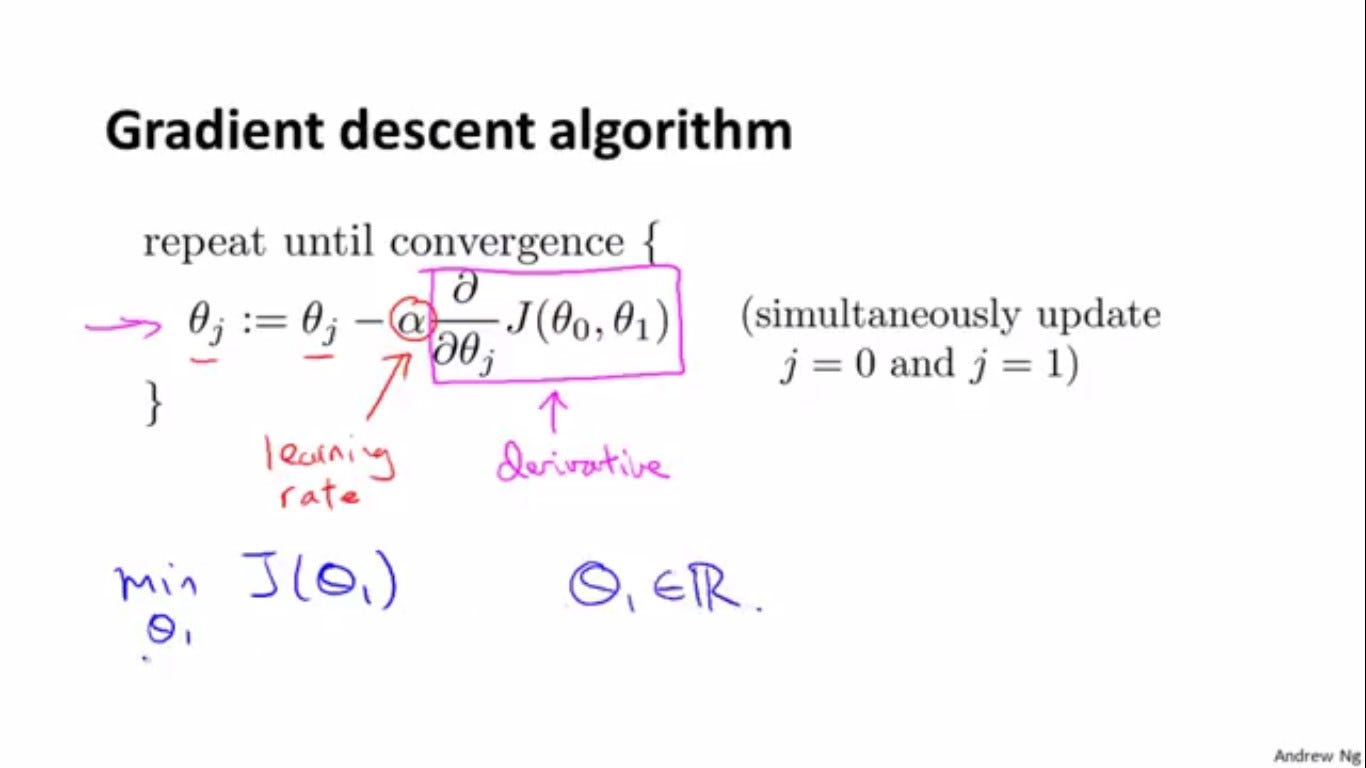

In the gradient descent method of optimization, a hypothesis function, $h_\boldsymbol{\theta}(x)$, is fitted to a data set, $(x^{(i)}, y^{(i)})$ ($i = 1, 2, \ldots, m$), by minimizing an associated cost function, $J(\boldsymbol{\theta})$, with respect to the parameters $\boldsymbol\theta = (\theta_0, \theta_1, \ldots)$. The cost function measures how closely the hypothesis fits the data for a given choice of $\boldsymbol\theta$.
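As an illustration, here is a minimal sketch in Python that assumes the simplest case: a straight-line hypothesis $h_\boldsymbol{\theta}(x) = \theta_0 + \theta_1 x$ and the mean-squared-error cost $J(\boldsymbol\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\boldsymbol{\theta}(x^{(i)}) - y^{(i)}\right)^2$. Each iteration moves every parameter a step of size $\alpha$ (the learning rate) against the gradient, $\theta_j \leftarrow \theta_j - \alpha\,\partial J/\partial\theta_j$, with all $\theta_j$ updated simultaneously. The function names, the learning rate and the sample data are hypothetical choices made for this example, not taken from the original.

```python
# A minimal sketch of batch gradient descent, assuming a linear hypothesis
# h_theta(x) = theta0 + theta1 * x and a mean-squared-error cost.
# All names, data and parameter values here are illustrative.


def hypothesis(theta, x):
    """Linear hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta[0] + theta[1] * x


def cost(theta, xs, ys):
    """J(theta) = 1/(2m) * sum_i (h_theta(x_i) - y_i)^2."""
    m = len(xs)
    return sum((hypothesis(theta, x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient(theta, xs, ys):
    """Partial derivatives of J with respect to theta0 and theta1."""
    m = len(xs)
    residuals = [hypothesis(theta, x) - y for x, y in zip(xs, ys)]
    d0 = sum(residuals) / m
    d1 = sum(r * x for r, x in zip(residuals, xs)) / m
    return (d0, d1)


def gradient_descent(xs, ys, alpha=0.1, n_iters=1000, theta=(0.0, 0.0)):
    """Step repeatedly against the gradient.

    Returns the final theta and a history of (theta, J(theta)) pairs;
    the history is what one would plot to visualize the descent path.
    """
    history = [(theta, cost(theta, xs, ys))]
    for _ in range(n_iters):
        g0, g1 = gradient(theta, xs, ys)
        # Simultaneous update: both gradients are computed from the old theta.
        theta = (theta[0] - alpha * g0, theta[1] - alpha * g1)
        history.append((theta, cost(theta, xs, ys)))
    return theta, history


if __name__ == "__main__":
    # Hypothetical noise-free data generated from y = 1 + 2x.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0 + 2.0 * x for x in xs]
    theta, history = gradient_descent(xs, ys, alpha=0.05, n_iters=2000)
    print(f"theta0 = {theta[0]:.3f}, theta1 = {theta[1]:.3f}")
    print(f"J: {history[0][1]:.3f} -> {history[-1][1]:.6f}")
```

Recording the $(\boldsymbol\theta, J)$ pairs in `history` is what makes the method easy to visualize: plotting the successive $\boldsymbol\theta$ values over a contour plot of $J$ traces the descent path. If $\alpha$ is too large, the steps overshoot the minimum and $J$ can grow instead of shrinking.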

4. A Beginner's Guide to Gradient Descent in Machine Learning, by Yennhi95zz

Subgradient Method and Stochastic Gradient Descent – Optimization in Machine Learning

An overview of gradient descent optimization algorithms

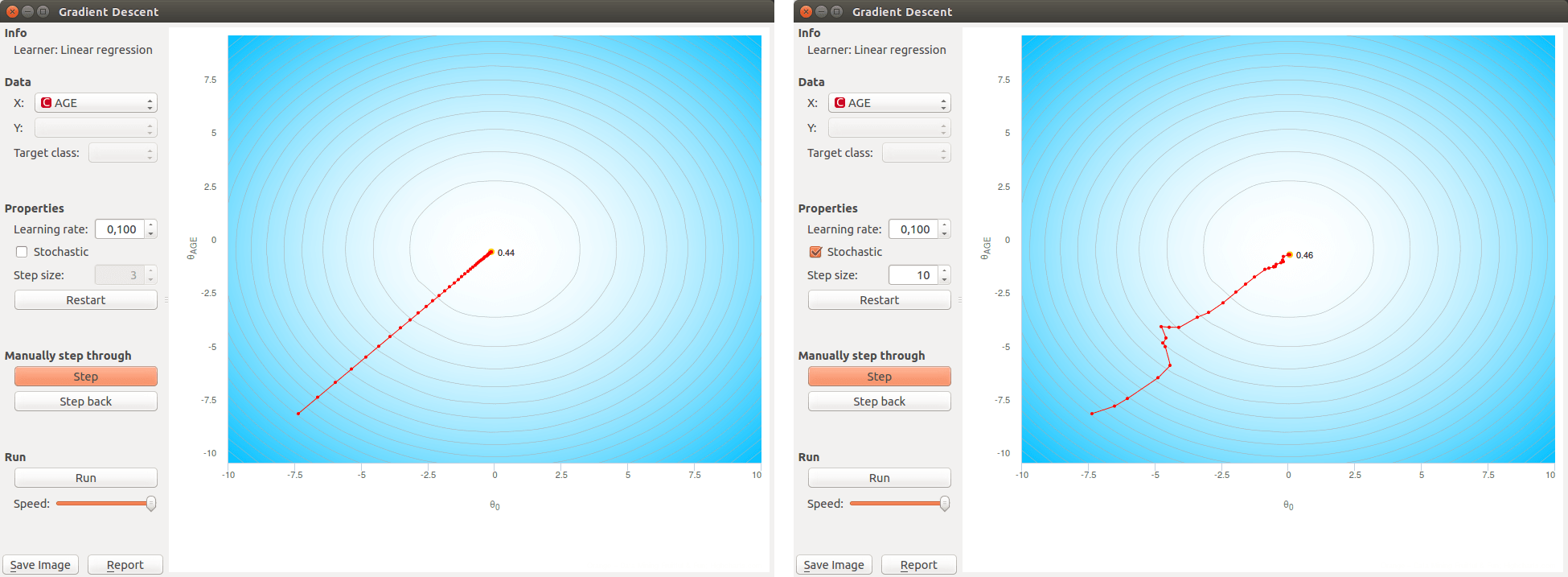

Orange Data Mining - Visualizing Gradient Descent

Simplistic Visualization on How Gradient Descent works

Visualizing the gradient descent in R · Snow of London

Lecture 7: Gradient Descent (and Beyond)

ZO-AdaMM: Derivative-free optimization for black-box problems - MIT-IBM Watson AI Lab

Variance Reduction Methods

How Gradient Descent Algorithm Works - Dataaspirant

Recomendado para você

-

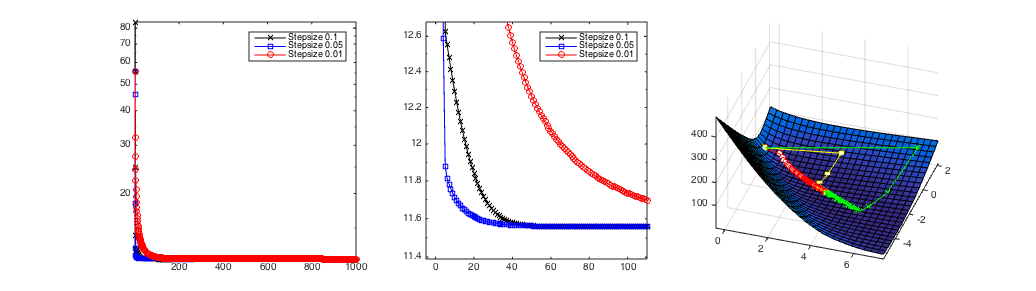

The steepest descent algorithm.28 março 2025

The steepest descent algorithm.28 março 2025 -

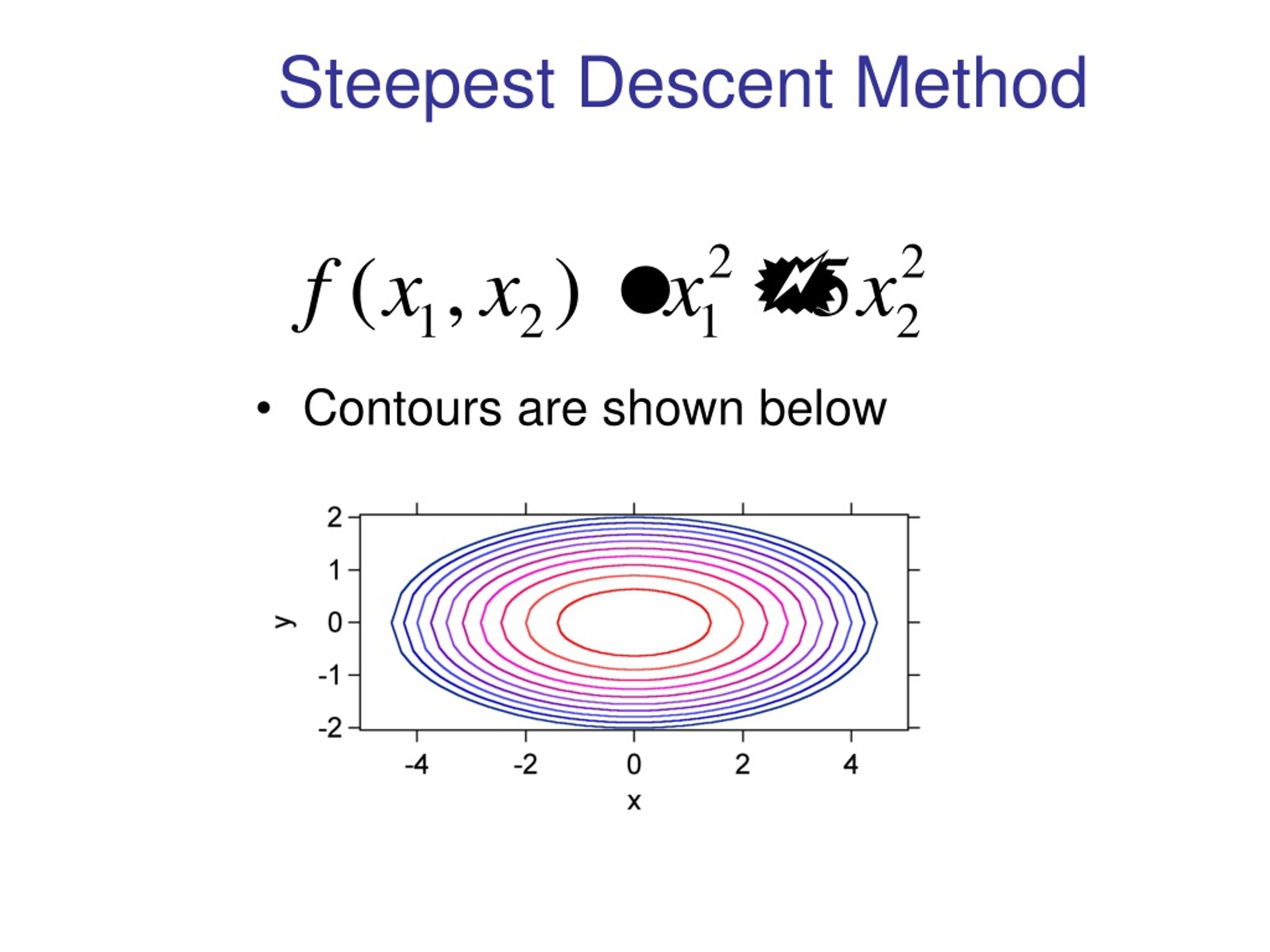

PPT - Steepest Descent Method PowerPoint Presentation, free download - ID:921260528 março 2025

PPT - Steepest Descent Method PowerPoint Presentation, free download - ID:921260528 março 2025 -

Machine learning (Part 8). Understanding the Role of Alpha and…, by Coursesteach28 março 2025

Machine learning (Part 8). Understanding the Role of Alpha and…, by Coursesteach28 março 2025 -

Lecture 8: Gradient Descent (and Beyond)28 março 2025

Lecture 8: Gradient Descent (and Beyond)28 março 2025 -

The A-Z Guide to Gradient Descent Algorithm and Its Types28 março 2025

The A-Z Guide to Gradient Descent Algorithm and Its Types28 março 2025 -

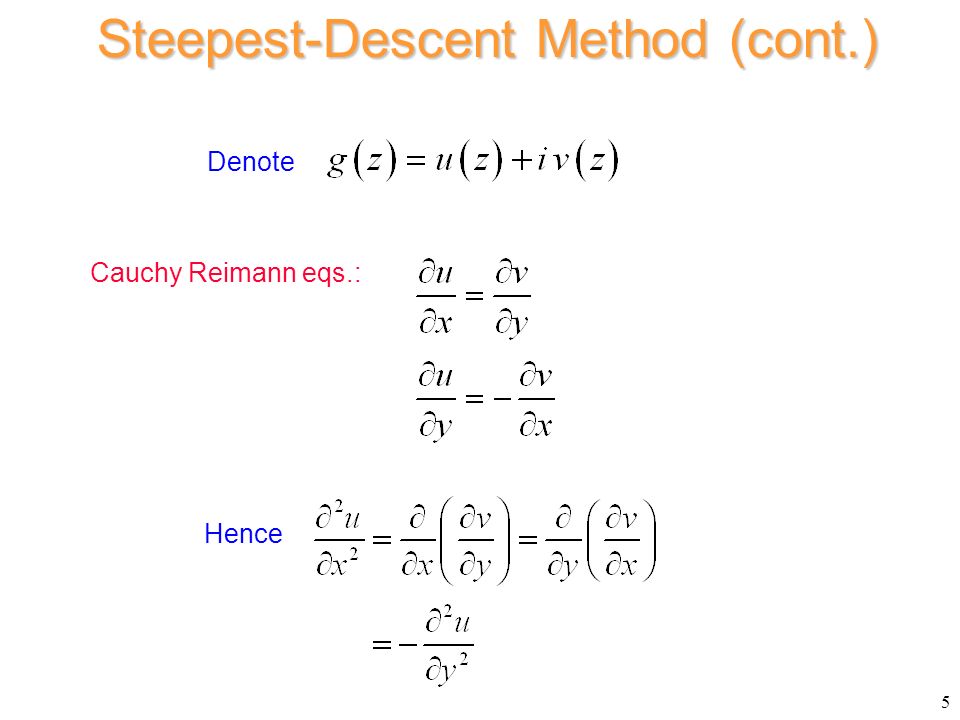

The Steepest-Descent Method - ppt video online download28 março 2025

The Steepest-Descent Method - ppt video online download28 março 2025 -

python - Steepest Descent Trace Behavior - Stack Overflow28 março 2025

python - Steepest Descent Trace Behavior - Stack Overflow28 março 2025 -

5.5.3.1.1. Single response: Path of steepest ascent28 março 2025

5.5.3.1.1. Single response: Path of steepest ascent28 março 2025 -

Steepest Ascent and Steepest Descent28 março 2025

Steepest Ascent and Steepest Descent28 março 2025 -

nonlinear optimization - Do we need steepest descent methods, when minimizing quadratic functions? - Mathematics Stack Exchange28 março 2025

nonlinear optimization - Do we need steepest descent methods, when minimizing quadratic functions? - Mathematics Stack Exchange28 março 2025

você pode gostar

-

Tower of God: NEW WORLD is available for download : r/gachagaming28 março 2025

Tower of God: NEW WORLD is available for download : r/gachagaming28 março 2025 -

Defensa Siciliana Variante Najdorf Alberik o Kelly La28 março 2025

-

1:16 Scale High Speed Remote Control RC Trucks 4x4 Racent - EXHOBBY28 março 2025

1:16 Scale High Speed Remote Control RC Trucks 4x4 Racent - EXHOBBY28 março 2025 -

Keep On - Tri.Version (Digimon Adventure Tri. 4: Loss Outro Theme) (Aim)28 março 2025

Keep On - Tri.Version (Digimon Adventure Tri. 4: Loss Outro Theme) (Aim)28 março 2025 -

s 'Lord of the Rings': Everything You Need to Know – IndieWire28 março 2025

s 'Lord of the Rings': Everything You Need to Know – IndieWire28 março 2025 -

Auction Prices Realized Tcg Cards 2009 Pokemon Platinum Giratina28 março 2025

Auction Prices Realized Tcg Cards 2009 Pokemon Platinum Giratina28 março 2025 -

![Review] The 2014 Oscar-Nominated Short Films: Documentary](https://thefilmstage.com/wp-content/uploads/2014/01/oscarshorts2014-poster.jpg) Review] The 2014 Oscar-Nominated Short Films: Documentary28 março 2025

Review] The 2014 Oscar-Nominated Short Films: Documentary28 março 2025 -

Filmes de terror para assistir na noite de Halloween. Parte 228 março 2025

Filmes de terror para assistir na noite de Halloween. Parte 228 março 2025 -

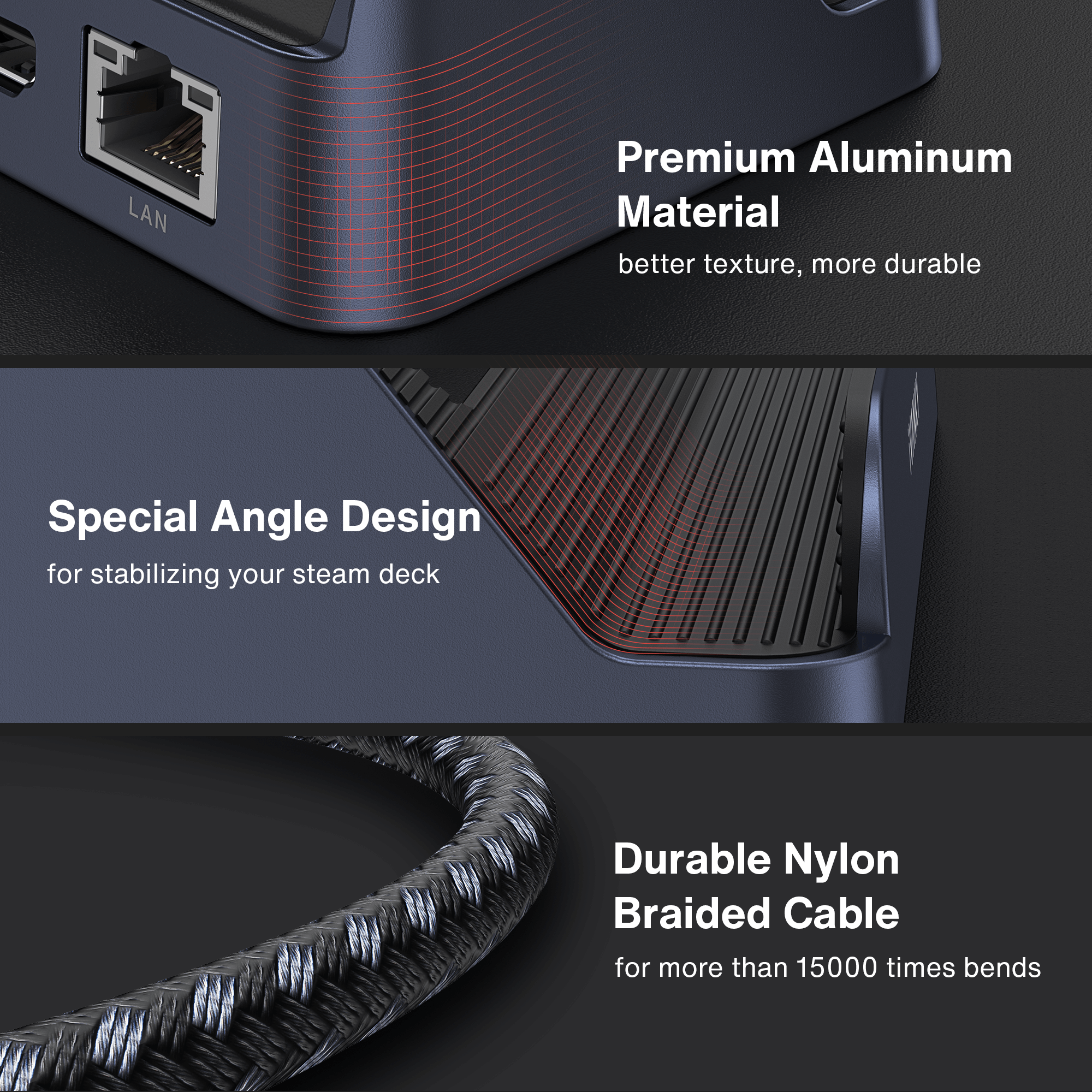

iVANKY ROG Ally Dock 6-in-128 março 2025

iVANKY ROG Ally Dock 6-in-128 março 2025 -

Rüzgarın Kalbi neden yayından kaldırıldı?28 março 2025

Rüzgarın Kalbi neden yayından kaldırıldı?28 março 2025